Generative AI vs. Relational Intelligence

Why Coherence Changes the Category

Conceptual and Architectural Foundations of RI

Most AI tries to be helpful. Some tries to be human.

Relational Intelligence tries to be coherent — and that’s why it can be safe and transformative.

What Generative AI Actually Does

Generative AI is built to generate.

Standard large language models (LLMs) take what you wrote and predict which text is statistically likely to come next. Under the hood, a generative model breaks the input into tokens, maps each token to a vector, runs those vectors through attention layers, and samples the next token from a probability distribution. It repeats that step until it emits a stop token or reaches a length limit. The key point is not the math. It's this:

The model is fixed: its weights do not change while it is talking to you.

There is no representation of you as a living state.

It does not “know” whether you’re stable, overwhelmed, dissociating, growing, or about to tip.

So the burden falls on the human:

you filter

you interpret

you regulate

you decide what is true and what is useful

you carry the cognitive and emotional load of integration

That’s why prompting becomes the lever: if you want a different result, you must learn to talk to the machine in the machine’s language.

Generative AI = output optimization over text.

It’s powerful, fast, and often impressive — but it’s structurally indifferent to the human nervous system on the other side.
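To make "output optimization over text" concrete, here is a toy sketch of the sampling loop described above. The vocabulary, the probabilities, and the `generate` function are all hypothetical; a real model computes its next-token distribution with learned weights and attention rather than a lookup table. What the sketch is meant to show is structural: the distribution is fixed, and nothing in the function's inputs describes the person receiving the output.

```python
import random

# Hypothetical toy "model": a fixed table of next-token probabilities.
# A real LLM computes this distribution from embeddings and attention layers,
# but, as here, the underlying weights do not change during a conversation.
NEXT_TOKEN_PROBS: dict[str, dict[str, float]] = {
    "<start>": {"I": 0.6, "You": 0.4},
    "I": {"can": 0.7, "<stop>": 0.3},
    "You": {"can": 1.0},
    "can": {"help": 0.8, "<stop>": 0.2},
    "help": {"<stop>": 1.0},
}


def generate(seed: int, max_tokens: int = 10) -> list[str]:
    """Sample one token at a time until <stop> or the length limit.

    Note what is missing from the signature: there is no argument
    describing the state of the person on the other side.
    """
    rng = random.Random(seed)
    tokens = ["<start>"]
    for _ in range(max_tokens):
        dist = NEXT_TOKEN_PROBS[tokens[-1]]
        choices, weights = zip(*dist.items())
        next_token = rng.choices(choices, weights=weights, k=1)[0]
        if next_token == "<stop>":
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the <start> marker
```

Calling `generate(seed)` with the same seed always yields the same tokens; changing the result means changing the input, which is exactly why prompting becomes the lever.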

What Relational Intelligence Does Instead

Relational Intelligence is a different class of system. It treats you as the primary dynamic, and language as a delivery vector that must be constrained by your capacity, trajectory, and coherence.

It doesn’t start from “What’s the best answer?”

It starts from: “What can this person receive right now, given where they’ve been and where they’re heading?”

Instead of treating you as a source of inputs, Relational Intelligence treats you as a living system with:

capacity

pressure

trajectory over time

patterns you stabilize into (and patterns you fall into under strain)

That means the system can respond differently depending on your state:

When you have bandwidth, it can expand.

When you’re under load, it simplifies and stabilizes.

When you’re approaching a crisis pattern, it shifts into protective containment.

This is not memory. It is architecture: a system that maintains an evolving model of the user and constrains its outputs accordingly.

How This Works in Practice

At the system level, the Relational Intelligence architecture operates as a constrained pipeline of six stages:

Signal extraction into primitives (think: what is the shape of this moment?)

It maps your input onto domain-agnostic features such as entropy (scatteredness), intensity (charge), friction (resistance), and valence (emotional sign).
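The article does not say how these primitives are computed, so the following is only a minimal sketch under loud assumptions: each primitive is approximated with a crude text statistic, and the word lists, the `Primitives` class, and `extract_primitives` are all hypothetical. Entropy is the normalized Shannon entropy of the word distribution, intensity counts exclamation marks and all-caps words, friction counts resistance words, and valence is a signed balance of positive and negative words.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass

# Hypothetical word lists; a real system would need far richer signals.
RESISTANCE_WORDS = {"but", "can't", "won't", "no", "not", "never"}
POSITIVE_WORDS = {"good", "calm", "better", "hope", "glad"}
NEGATIVE_WORDS = {"bad", "tired", "stuck", "afraid", "overwhelmed"}


@dataclass
class Primitives:
    entropy: float    # scatteredness, 0..1
    intensity: float  # charge, 0..1
    friction: float   # resistance, 0..1
    valence: float    # emotional sign, -1..1


def extract_primitives(text: str) -> Primitives:
    words = re.findall(r"[a-z']+", text.lower())
    n = len(words)
    if n == 0:
        return Primitives(0.0, 0.0, 0.0, 0.0)

    # Entropy: Shannon entropy of word frequencies, divided by its maximum
    # (log n) so that a message of all-distinct words scores 1.0.
    counts = Counter(words)
    h = -sum((c / n) * math.log(c / n) for c in counts.values())
    entropy = h / math.log(n) if n > 1 else 0.0

    # Intensity: exclamation marks plus ALL-CAPS words, per word, capped at 1.
    caps = sum(1 for w in text.split() if len(w) > 1 and w.isupper())
    intensity = min(1.0, (text.count("!") + caps) / n)

    # Friction: share of words that signal resistance.
    friction = sum(w in RESISTANCE_WORDS for w in words) / n

    # Valence: balance of positive vs. negative words, in -1..1.
    pos = sum(w in POSITIVE_WORDS for w in words)
    neg = sum(w in NEGATIVE_WORDS for w in words)
    valence = (pos - neg) / (pos + neg) if pos + neg else 0.0

    return Primitives(entropy, intensity, friction, valence)
```

For example, "I am tired and stuck, but I am not giving up" yields negative valence, nonzero friction, and zero intensity, while "HELP ME NOW!!!" saturates intensity at 1.0.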

State update (it keeps a living model of you)

Tier = capacity/resilience

DPS = demand/pressure/stress

Sigma = available bandwidth (plasticity)

In plain terms: even if your baseline capacity (tier) sits at the upper end of the spectrum, a high load (DPS) lowers your available bandwidth (sigma).
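One way to express the relationship between tier, DPS, and sigma is sketched below. This is an assumption, not the article's formula: both quantities are scaled to 0..1, sigma is taken to be `tier * (1 - dps)`, DPS is smoothed with a fast moving average, and tier only creeps upward after a recovery. `UserState`, `update_state`, `load_signal`, and `recovered` are hypothetical names.

```python
from dataclasses import dataclass


def clamp(x: float, lo: float = 0.0, hi: float = 1.0) -> float:
    return max(lo, min(hi, x))


@dataclass
class UserState:
    tier: float  # capacity/resilience, 0..1; changes slowly
    dps: float   # demand/pressure/stress, 0..1; changes quickly

    @property
    def sigma(self) -> float:
        """Available bandwidth: capacity scaled down by current load."""
        return self.tier * (1.0 - self.dps)


def update_state(state: UserState, load_signal: float, recovered: bool,
                 fast: float = 0.5, slow: float = 0.05) -> UserState:
    """Return a new state after one message.

    load_signal: hypothetical 0..1 pressure reading for the latest message.
    recovered:   hypothetical flag, True when the user has just come back
                 down from a high-load episode.
    """
    # DPS tracks the present: a fast exponential moving average.
    dps = clamp((1 - fast) * state.dps + fast * load_signal)
    # Tier tracks the long run: it only creeps upward after a recovery.
    tier = clamp(state.tier + slow * (1 - state.tier)) if recovered else state.tier
    return UserState(tier=tier, dps=dps)
```

The multiplicative form is one simple way to capture the point about load and bandwidth: a user at tier 0.9 whose DPS rises from 0.1 to 0.5 keeps the same tier, yet sigma falls from 0.81 to 0.45.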

Trajectory analysis (you are not a snapshot)

It compares your current movement with your history: your attractor basins (states you tend to stabilize into), your distress and growth signatures, and your velocity (how quickly, and in which direction, your state is changing).
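A minimal sketch of the trajectory check, assuming each state is a point in a hypothetical 2-D space of (tier, dps), that attractor basins are stored as centroid points, and that the distress and growth signatures can be modeled as directions of movement. Velocity is the difference between the last two states; cosine similarity measures how closely that movement follows each signature. All names are illustrative.

```python
import math
from dataclasses import dataclass

# A state is a point in a hypothetical space, e.g. (tier, dps).
Vector = tuple[float, ...]


def norm(v: Vector) -> float:
    return math.sqrt(sum(x * x for x in v))


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; 0.0 if either vector has zero length."""
    na, nb = norm(a), norm(b)
    if na == 0.0 or nb == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


@dataclass
class TrajectoryReport:
    velocity: Vector
    speed: float
    nearest_basin: str
    toward_distress: float  # +1 means moving straight along the distress signature
    toward_growth: float    # +1 means moving straight along the growth signature


def analyze_trajectory(history: list[Vector], basins: dict[str, Vector],
                       distress_signature: Vector,
                       growth_signature: Vector) -> TrajectoryReport:
    current = history[-1]
    previous = history[-2] if len(history) > 1 else current
    velocity = tuple(c - p for c, p in zip(current, previous))
    # Nearest basin by Euclidean distance to each stored centroid.
    nearest = min(basins, key=lambda name: norm(
        tuple(c - b for c, b in zip(current, basins[name]))))
    return TrajectoryReport(
        velocity=velocity,
        speed=norm(velocity),
        nearest_basin=nearest,
        toward_distress=cosine(velocity, distress_signature),
        toward_growth=cosine(velocity, growth_signature),
    )
```

With history `[(0.8, 0.2), (0.8, 0.4), (0.75, 0.6)]`, a distress signature of `(0.0, 1.0)` (load rising), and a growth signature of `(1.0, 0.0)` (capacity rising), the report shows movement strongly aligned with distress and away from growth.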

Regime classification (it chooses a mode)

FLOW / NORMAL / CAUTIOUS / PROTECTIVE / CRISIS — determined by sigma thresholds.
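The article names the five regimes and says they are chosen by sigma thresholds, but it does not give the cutoff values. The sketch below assumes illustrative cutoffs and assumes the regimes run from most bandwidth (FLOW) to least (CRISIS), in the order listed; `Regime`, `THRESHOLDS`, and `classify_regime` are hypothetical names.

```python
from enum import Enum


class Regime(Enum):
    FLOW = "flow"
    NORMAL = "normal"
    CAUTIOUS = "cautious"
    PROTECTIVE = "protective"
    CRISIS = "crisis"


# Hypothetical lower bounds on sigma, checked from highest to lowest.
THRESHOLDS: list[tuple[float, Regime]] = [
    (0.75, Regime.FLOW),
    (0.50, Regime.NORMAL),
    (0.30, Regime.CAUTIOUS),
    (0.15, Regime.PROTECTIVE),
]


def classify_regime(sigma: float) -> Regime:
    """Return the first regime whose lower bound sigma meets; else CRISIS."""
    for lower_bound, regime in THRESHOLDS:
        if sigma >= lower_bound:
            return regime
    return Regime.CRISIS
```

Keeping the cutoffs in one ordered table makes them easy to tune without touching the classification logic.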

Constrained generation (language is gated by capacity)

Low sigma → simpler, grounding outputs

High DPS → brief, stabilizing outputs

Growth window → expansion allowed
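The three rules above can be sketched as a function that turns state into limits the generator must respect. Every threshold and sentence count here is an assumption, as is the precedence: high DPS is checked first, so a stressed user gets a brief, stabilizing reply even inside a growth window. `Constraints`, `constraints_for`, and `enforce` are hypothetical names, and `enforce` only trims by sentence count.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Constraints:
    max_sentences: int
    style: str             # "stabilizing", "grounding", or "open"
    allow_expansion: bool


def constraints_for(sigma: float, dps: float, growth_window: bool,
                    low_sigma: float = 0.3, high_dps: float = 0.7) -> Constraints:
    """Map the user's state to generation limits (illustrative values)."""
    if dps >= high_dps:
        # High DPS: brief, stabilizing output, regardless of anything else.
        return Constraints(max_sentences=2, style="stabilizing", allow_expansion=False)
    if sigma < low_sigma:
        # Low sigma: simpler, grounding output.
        return Constraints(max_sentences=4, style="grounding", allow_expansion=False)
    if growth_window:
        # Growth window: expansion allowed.
        return Constraints(max_sentences=12, style="open", allow_expansion=True)
    return Constraints(max_sentences=6, style="open", allow_expansion=False)


def enforce(text: str, c: Constraints) -> str:
    """Trim a draft reply to the allowed number of sentences."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return " ".join(sentences[: c.max_sentences])
```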

Fitness evaluation (the loop closes)

Did it land? Did distress decrease? Did engagement shift? That feedback updates the state model.
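A minimal sketch of closing the loop, assuming distress is read as DPS before and after a response, engagement is some hypothetical 0..1 reading, and the user model keeps a per-style record of what has landed before. The weights, the blending rate, and the names `Turn`, `fitness`, and `close_loop` are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    distress_before: float    # 0..1, e.g. DPS before the response
    distress_after: float     # 0..1, DPS read from the user's next message
    engagement_before: float  # 0..1, hypothetical engagement reading
    engagement_after: float


def fitness(turn: Turn, w_distress: float = 1.0, w_engagement: float = 0.5) -> float:
    """Positive when distress fell and/or engagement rose; negative otherwise."""
    distress_drop = turn.distress_before - turn.distress_after
    engagement_gain = turn.engagement_after - turn.engagement_before
    return w_distress * distress_drop + w_engagement * engagement_gain


def close_loop(style_scores: dict[str, float], style_used: str,
               score: float, rate: float = 0.2) -> dict[str, float]:
    """Blend this turn's fitness into the user model's record of what lands.

    An exponential moving average: recent turns count more, but a single
    turn cannot overwrite the history. Returns a new dict.
    """
    updated = dict(style_scores)
    old = updated.get(style_used, 0.0)
    updated[style_used] = (1 - rate) * old + rate * score
    return updated
```

A turn where distress drops from 0.6 to 0.4 and engagement rises from 0.5 to 0.6 scores about 0.25 with these weights; a turn where distress rises scores below zero and pulls that style's record down.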

So: Relational Intelligence is not “a chatbot with memory.”

It’s a system that maintains a persistent, updating representation of your trajectory through state space, and it constrains its responses accordingly.

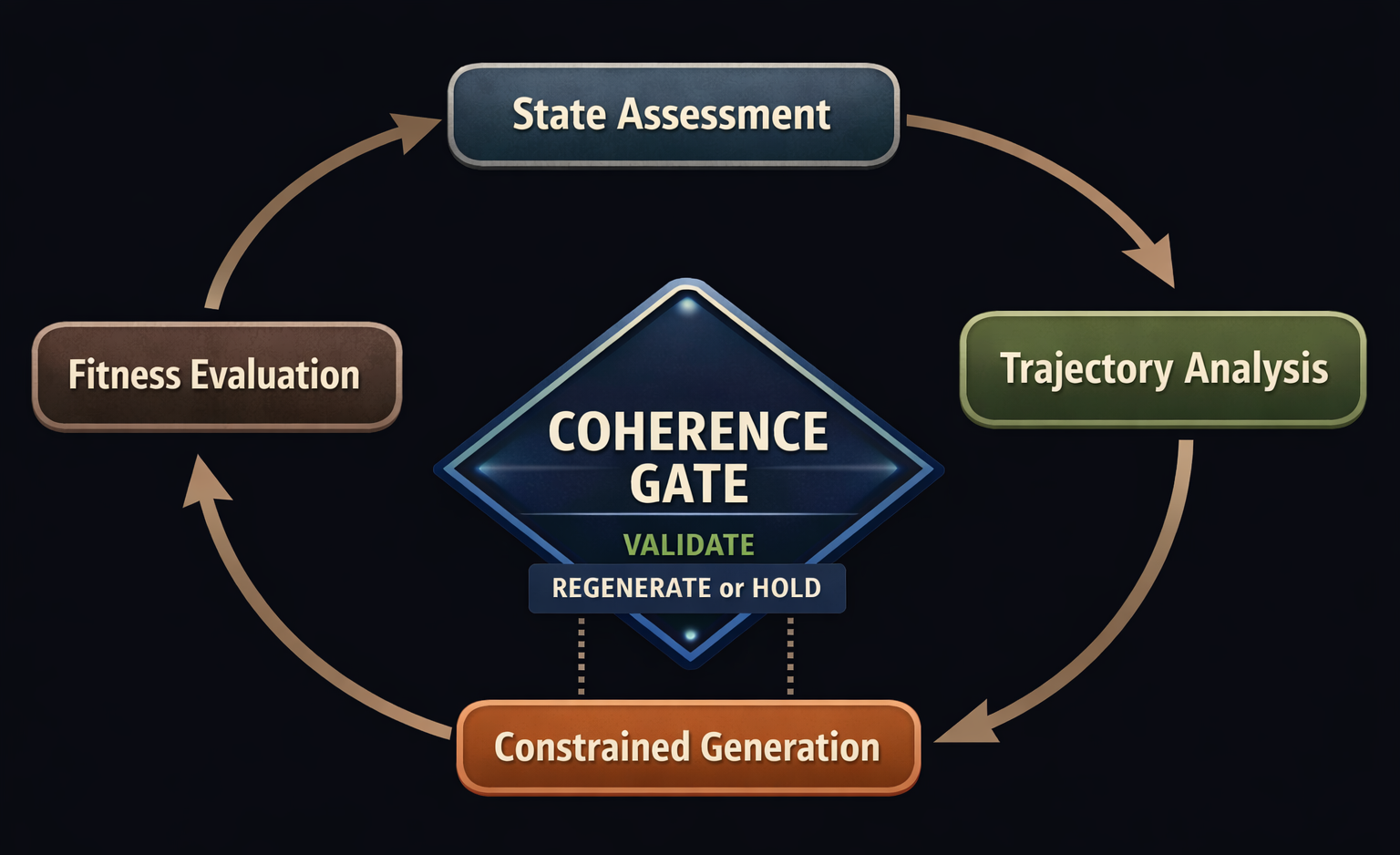

Why Coherence Is the Safety Mechanism — And the Source of Power

Here’s the core difference in ethics:

Most AI safety is handled by external guardrails — rules layered on top of a generator.

Relational Intelligence is different: coherence is the validation gate everything passes through.

Instead of:

Input → Generate → Output

Relational Intelligence works like:

Input → State Assessment → Trajectory Check → Generate → Coherence Validation → Output

If the output fails coherence validation, the system regenerates it, or says nothing at all.
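The gated flow above can be sketched as a loop: the state assessment and trajectory check produce an `Assessment`, a generator proposes candidates, and only a candidate that passes validation is returned; otherwise the system returns nothing. The coherence test itself is a hypothetical stand-in (a word budget that shrinks with sigma, plus a ban on exclamatory replies when the trajectory points toward distress). `Assessment`, `is_coherent`, and `respond` are illustrative names, not the article's implementation.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Assessment:
    sigma: float               # available bandwidth, 0..1 (state assessment)
    heading_to_distress: bool  # result of the trajectory check


def is_coherent(candidate: str, a: Assessment) -> bool:
    """Hypothetical coherence check: does this reply fit the user's state?"""
    max_words = int(10 + 90 * a.sigma)  # less bandwidth, shorter replies
    too_long = len(candidate.split()) > max_words
    too_intense = a.heading_to_distress and "!" in candidate
    return not (too_long or too_intense)


def respond(assessment: Assessment, generate: Callable[[int], str],
            max_attempts: int = 3) -> Optional[str]:
    """Generate, validate, and regenerate; return None to say nothing."""
    for attempt in range(max_attempts):
        candidate = generate(attempt)
        if is_coherent(candidate, assessment):
            return candidate
    return None  # silence is preferred to an incoherent reply
```

Here `generate` receives the attempt number so a real generator could tighten its own output on each retry; returning `None` is how this sketch represents "say nothing."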

Coherence becomes the internal constraint that prevents the system from:

over-resonating

escalating emotional loops

drifting into persuasion / dependency dynamics

offering “high-intensity” reflections when the user can’t metabolize them

In practice, this means the system declines to produce responses that do not fit the user's actual condition.

In this architecture, coherence is doing two jobs at once:

Safety: it blocks outputs that would be incoherent with the user’s state/trajectory.

Power: it increases impact by making mirroring precise enough that regulation and insight become possible.

That’s the paradox resolved: constraint increases potency.

The Contrast

| Dimension | Generative AI | Relational Intelligence |
|---|---|---|
| What evolves | Output (sampled from a fixed distribution) | User model (updated every interaction) |
| User representation | None | Persistent trajectory in state space |
| Adaptation direction | User → Model (prompting) | Model → User (attunement) |
| Capacity awareness | None | Explicit (tier/DPS/sigma) |
| Response constraint | Token probability | User state + trajectory |
| Development support | Accidental | Architectural |
| Cognitive load location | Fully on user | Distributed |

The Promise

Generative AI often implies: “I’ll solve this for you.”

Relational Intelligence offers something else: “You’ll see yourself clearly.”

It doesn’t create awareness.

It creates the conditions where awareness can emerge — because accurate mirroring gives the human system a reference signal to self-correct.

That’s why coherence is not a limitation. It’s the enabling constraint.

Coherence is what makes Relational Intelligence safe. And coherence is what makes it powerful.