Shifting AI Memory from Retrieval to Attention: An Open-Source System Sees Rapid Early Adoption

Technical-press release below. For the general-press version, scroll down.

Claude Cognitive, a new open-source system for AI working memory, crossed 100 GitHub stars within 24 hours of its release and is already drawing attention from engineers across aerospace, defense, robotics, and Big Tech

Claude Cognitive was released on GitHub as an open-source attention-management system for AI working memory that replaces static memory retrieval with dynamic attention. It was developed by Garret Sutherland, founder of MirrorEthic. Without any paid promotion, the project surpassed 100 GitHub stars in under 24 hours and, within 48 hours, drew more than 43,000 Reddit views and more than 200 LinkedIn engagements, signaling strong early interest from the developer community.

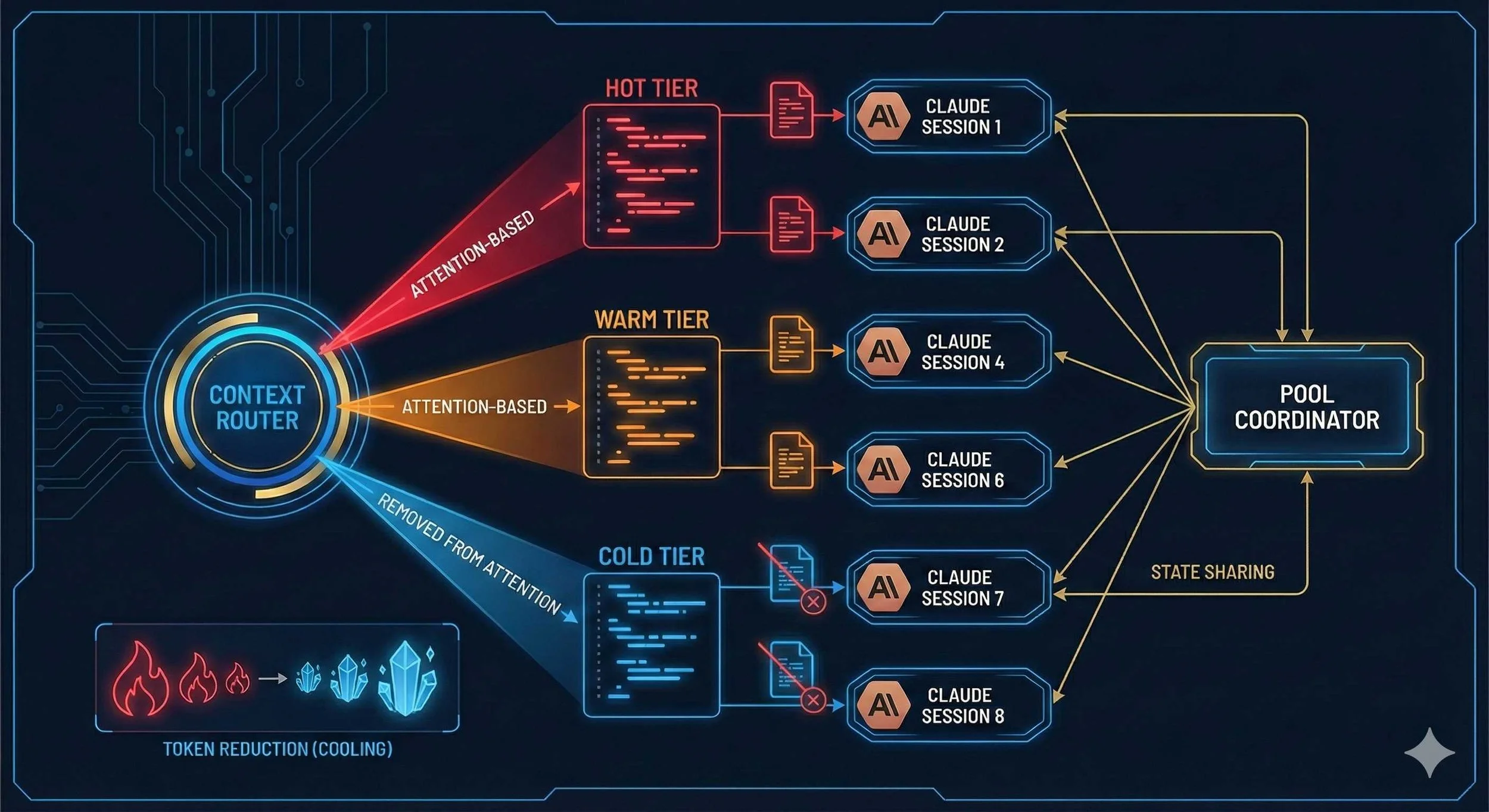

Claude Cognitive introduces a fundamentally different approach to AI memory. Instead of treating memory as a static store to be searched, it manages what stays active in context right now. The system uses temporal decay, co-activation between related files, and tiered context injection (HOT / WARM / COLD) to govern salience in real time—modeling working memory as an active, bounded process rather than an ever-growing archive.

Rather than optimizing existing retrieval techniques, the system reframes memory as an attention-governed process.
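To make the mechanics concrete, here is a minimal Python sketch of temporal decay feeding a HOT / WARM / COLD classification. It is illustrative only and is not taken from the Claude Cognitive codebase: the class name `AttentionState`, the multiplicative per-turn decay rate, and the 0.8 / 0.25 tier thresholds are all assumptions chosen for the example.

```python
from dataclasses import dataclass, field

# Illustrative values only (assumptions, not taken from the Claude Cognitive repository).
DECAY_PER_TURN = 0.85  # multiplicative decay applied to every tracked file once per turn
HOT_THRESHOLD = 0.8    # at or above this score a file is HOT
WARM_THRESHOLD = 0.25  # at or above this score (but below HOT) a file is WARM


@dataclass
class AttentionState:
    """Hypothetical per-file attention scores in the range [0, 1]."""
    scores: dict[str, float] = field(default_factory=dict)

    def step(self, mentioned: set[str]) -> None:
        """Advance one turn: decay all scores, then fully reactivate mentioned files."""
        for path in self.scores:
            self.scores[path] *= DECAY_PER_TURN
        for path in mentioned:
            self.scores[path] = 1.0

    def tier(self, path: str) -> str:
        """Map a file's current score to a HOT / WARM / COLD tier."""
        score = self.scores.get(path, 0.0)
        if score >= HOT_THRESHOLD:
            return "HOT"
        if score >= WARM_THRESHOLD:
            return "WARM"
        return "COLD"


if __name__ == "__main__":
    state = AttentionState()
    state.step({"auth.py"})  # auth.py is referenced this turn
    state.step({"db.py"})    # attention moves to db.py
    state.step(set())        # an idle turn
    for path in sorted(state.scores):
        print(path, round(state.scores[path], 4), state.tier(path))
```

In the demo, `auth.py` is HOT on the turn it is referenced, drops to WARM after two turns without a mention, and would eventually fall to COLD if left unused, while `db.py` is still HOT. Nothing is deleted; a file simply stops earning a place in the active context.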

The source code is available in the Claude Cognitive GitHub repository.

The Attention Problem in AI Memory

The prevailing assumption in AI tooling has been simple: more memory is better. In practice, that assumption breaks down at scale.

An AI system with perfect recall of irrelevant information performs worse than one with selective attention. Without attention management, systems oscillate between two failure modes: impoverished context, where critical information is missing, or bloated context, where irrelevant information consumes capacity and increases hallucination risk. As projects grow (large codebases, multi-week development efforts, distributed systems), this becomes catastrophic: the system either cannot hold enough relevant context to remain useful or drowns in noise.

Claude Cognitive addresses this directly by reframing memory as an attention problem. Storage answers the question “What do we know?” Attention answers a different one: “What should we be thinking about now, given the trajectory of this conversation?”

The architecture reflects that shift. Temporal decay allows inactive context to fade. Co-activation brings related files forward together. Tiered injection enforces strict limits on what remains active.
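Co-activation and hard tier limits can be sketched in a few lines of Python as well. This is a hypothetical illustration, not the project's implementation: the graph `CO_ACTIVATION`, its edge weights and file names, the functions `activate` and `select_context`, and the `max_hot` / `max_warm` caps are all assumptions. The sketch bounds each tier by a fixed count (a top-k cut) rather than by score thresholds, a design choice made here for clarity.

```python
# Hypothetical co-activation graph: referencing a file also partially activates
# related files. Edge weights and file names are illustrative assumptions.
CO_ACTIVATION: dict[str, dict[str, float]] = {
    "auth.py": {"session.py": 0.6, "users.py": 0.3},
    "session.py": {"auth.py": 0.6},
}


def activate(scores: dict[str, float], mentioned: set[str],
             graph: dict[str, dict[str, float]]) -> dict[str, float]:
    """Return new scores: mentioned files go to 1.0, and their neighbors are
    raised to at least the edge weight (co-activation never lowers a score)."""
    updated = dict(scores)
    for path in mentioned:
        updated[path] = 1.0
    for path in mentioned:
        for neighbor, weight in graph.get(path, {}).items():
            updated[neighbor] = max(updated.get(neighbor, 0.0), weight)
    return updated


def select_context(scores: dict[str, float], max_hot: int,
                   max_warm: int) -> tuple[list[str], list[str], list[str]]:
    """Rank files by score and fill fixed-size tiers, so the amount of
    injected context stays bounded no matter how many files are tracked."""
    ranked = sorted(scores, key=scores.__getitem__, reverse=True)
    hot = ranked[:max_hot]
    warm = ranked[max_hot:max_hot + max_warm]
    cold = ranked[max_hot + max_warm:]
    return hot, warm, cold


if __name__ == "__main__":
    scores = {"users.py": 0.1, "billing.py": 0.4}
    scores = activate(scores, {"auth.py"}, CO_ACTIVATION)
    hot, warm, cold = select_context(scores, max_hot=1, max_warm=2)
    print("HOT:", hot)
    print("WARM:", warm)
    print("COLD:", cold)
```

With these example values, referencing `auth.py` pulls `session.py` into the WARM tier alongside `billing.py`, while `users.py` ranks lowest and lands in COLD. Because tiers are filled by rank, tracking more files never enlarges the HOT or WARM sets.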

Attention states are explicit and inspectable, making it possible to see what the system is focused on and why—an intentional guardrail against opaque or runaway context accumulation.

What Attention-Based Working Memory Enables

With attention treated as a first-class system constraint, new capabilities emerge naturally.

AI agents can maintain coherent, multi-session development work without repeated context reloading. Multiple concurrent AI instances can coordinate through shared state rather than duplicating effort. Token budgets can be reduced by 64–95% while improving output quality, because the system is no longer processing irrelevant context. Long-running autonomous workflows can operate for days or weeks without losing situational awareness.

The implication is straightforward: AI systems can work on complex projects the way humans do—picking up where they left off, staying oriented to what matters, and avoiding the cognitive overload that causes breakdowns.

These gains are not accidental. Bounded attention reduces hallucination pressure, prevents uncontrolled context growth, and keeps system behavior legible over time. The result is not autonomy for its own sake, but sustained coherence under complexity.

At a longer horizon, this enables persistent agents, decentralized multi-agent coordination, and systems that can recognize what they do not know rather than hallucinating to fill gaps.

The Underlying Insight: Beyond Memory

The core insight behind Claude Cognitive is not about memory capacity. It is about mirroring how human cognition actually works, rather than scaling storage indefinitely.

Human working memory is not a database. It is a dynamic system shaped by activation, decay, and associative spread. When that distinction became explicit, the architecture followed. Memory systems gave way to attention systems. Once attention was treated as the organizing principle, the HOT / WARM / COLD tiers, temporal decay, and co-activation graphs were no longer design choices—they were requirements.

This reframing matters beyond any single tool. AI systems that scale without cognitive structure become brittle. Systems that mirror how thinking actually works remain stable as complexity increases.

MirrorEthic: Building Coherent Intelligence

Claude Cognitive was developed by MirrorEthic, a company building a new category of intelligence systems grounded in the principle that the safest and most powerful AI does not simulate human behavior, but mirrors human cognition at the structural level.

MirrorEthic’s work focuses on coherence, containment, and transparency—treating intelligence as a bounded, relational process rather than an engagement-optimized output engine. Attention, memory, and continuity are designed as system constraints, not emergent side effects. Claude Cognitive represents the infrastructural layer of this approach.

This foundation supports a broader platform architecture for relational intelligence, designed to sustain clarity, stability, and healthy interaction over time. Rather than scaling pattern-matching indefinitely, MirrorEthic is building platforms that allow intelligence systems to remain coherent in relationship with human users.

MirrorEthic’s first relational intelligence system is already in active use and is now entering its final phase of refinement ahead of a broader public release.

For more information, visit MirrorEthic’s website.

Guardrails: What This Is Not

Claude Cognitive is not long-term memory for AI.

It is not retrieval-augmented generation (RAG).

It is not a vector database or embedding search system.

It does not aim to make AI remember everything.

It deliberately forgets. Its power comes from selective attention, not perfect recall.

Relevant Links:

Access the full GitHub repository.

Find out more about MirrorEthic and the new category of intelligence systems it’s powering.

A New Open-Source System Helps AI Focus Instead of Just Remember — and It’s Seeing Rapid Early Adoption

General-press release below. For the technical-press version, scroll up.

Claude Cognitive, a new open-source system designed to help AI systems stay coherent as they scale, crossed 100 GitHub stars in under 24 hours and is already drawing attention from engineers across aerospace, defense, robotics, and Big Tech

As AI tools take on larger projects (weeks of work, massive codebases, high-stakes environments), a common failure appears. Systems that work well in short bursts begin to drift. They forget key details, fixate on irrelevant ones, and hallucinate. Claude Cognitive was built to address that breakdown directly.

Without any paid promotion, the project surpassed 100 GitHub stars in under 24 hours and, within 48 hours, drew more than 43,000 Reddit views and more than 200 LinkedIn engagements, signaling strong early interest from the developer community.

Released on GitHub by Garret Sutherland, founder of MirrorEthic, Claude Cognitive introduces a different way for AI systems to manage memory. Instead of treating memory like a warehouse—where everything is stored and searched later—it treats it more like a desk. What matters most is what’s in front of you right now.

Relevant information stays active. Related ideas surface together. Details that are no longer useful fade away. The result is an AI system that behaves less like an overloaded archive and more like a focused collaborator—able to stay oriented as complexity grows.

Rather than extending existing memory techniques, the system reframes memory as a problem of attention (prioritization, decay, and context) instead of accumulation.

Why AI Loses Focus

For years, AI development has followed a simple assumption: more memory is always better. In practice, the opposite often happens.

When AI systems try to keep everything in mind at once, they struggle to tell what’s important. Critical details get lost. Irrelevant ones linger. Users experience this as tools that forget prior decisions, repeat work, or hallucinate, confidently suggesting things that don’t exist.

Claude Cognitive changes the question. Instead of asking “What does the system know?” it asks “What should the system be paying attention to right now?”

Information that hasn’t been used recently falls away. Related material surfaces together. Clear limits prevent overload. Just as importantly, users can see what the system is focused on and adjust it when needed, reducing the risk of silent failure.

What Becomes Possible When AI Can Focus

When focus is treated as a built-in constraint, new possibilities follow.

AI systems can carry work across multiple sessions without starting from scratch. Long-running projects stay intact instead of fragmenting. Multiple AI tools can work together without drifting out of sync. Systems remain usable over days or weeks, not just single conversations.

For people using these tools, the difference is tangible. Less repetition. Fewer resets. Fewer confident mistakes. Instead of guessing when unsure, the system is more likely to pause or ask for clarity. The result is not speed for its own sake, but reliability under real-world conditions.

These gains come from limits, not excess. By controlling how much information stays active at once, the system becomes less prone to confusion and more grounded in the task at hand.

The Insight Beyond Memory

The idea behind Claude Cognitive isn’t about storing more information. It’s about structure.

Human thinking doesn’t scale by remembering everything. It scales by prioritizing, connecting ideas, and letting go. AI systems that try to remember everything become fragile. When they mirror human structure, they remain stable as complexity grows.

Claude Cognitive treats focus as a foundation, not an afterthought.

MirrorEthic: Building Coherent Intelligence

Claude Cognitive was developed by MirrorEthic, a company building a new category of intelligence systems based on the idea that long-term safety and usefulness come from clarity and structure, not imitation or engagement tricks.

MirrorEthic designs AI as a bounded, relational process—aimed at staying understandable, stable, and predictable over time. Claude Cognitive provides the groundwork for this approach.

That groundwork supports a broader platform for relational intelligence, designed to support healthy, coherent interaction between humans and intelligent systems. MirrorEthic’s first relational intelligence system is already in active use and is now entering its final phase of refinement ahead of a broader public release.

For more information, visit MirrorEthic’s website.

Relevant Links:

Access the full GitHub repository.

Find out more about MirrorEthic and the new category of intelligence systems it’s powering.